LLMs vs Datasets: What’s the Difference?

Artificial intelligence is changing the way we interact with technology, and at the heart of many of these advancements are Large Language Models (LLMs) and datasets. If you’ve ever played around with ChatGPT or Google’s Bard, you’ve already seen an LLM in action. But what exactly is an LLM, and how does it differ from the dataset it’s trained on? Let’s break it down in an easy-to-digest way.

1. What is a Large Language Model (LLM)?

Think of an LLM as a super-smart, AI-powered autocomplete system. It takes in words, processes them using complex mathematical patterns, and predicts what comes next in a meaningful way. But LLMs are more than just fancy text predictors – they can analyze, summarize, translate, and even generate entirely new pieces of content.

An LLM is essentially a machine learning model trained on vast amounts of text data. It uses deep learning techniques, particularly neural networks, to understand and generate human-like language. Some well-known examples include:

- GPT-4 (ChatGPT) – The model behind OpenAI’s chatbot.

- BERT (Bidirectional Encoder Representations from Transformers) – A Google AI model used in search engines.

- LLaMA (Large Language Model Meta AI) – Meta’s open-source AI model.

2. What is a Dataset?

A dataset, on the other hand, is simply a collection of data. In the context of AI and LLMs, a dataset consists of millions (or even billions) of words, phrases, and sentences collected from various sources. These can include books, articles, Wikipedia entries, websites, and even social media posts.

The dataset is what fuels an LLM’s learning process. Think of it like this:

- The dataset is a massive library of books.

- The LLM is a student who reads all those books and learns patterns, structures, and relationships between words.

Datasets come in different shapes and sizes, such as:

- Common Crawl – A publicly available dataset that scrapes vast amounts of text from the internet.

- Wikipedia – A popular source of factual information used in training many AI models.

- BooksCorpus – A dataset composed of thousands of books, used for training models in literary language understanding.

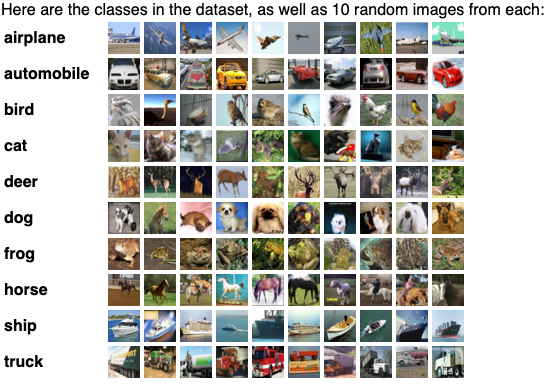

Let me give you a real-life example of a dataset and how I have used it. I wrote a Python program to identify and tag a collection of my images by training a model. What helped me was an existing dataset that contained 60,000 images in ten classes. The one I used was called the CIFAR-10 dataset. I incorporated this dataset and ran a test against my images to see if they were identified properly – BTW this is called inference. If you are interested in the python code, leave a comment at the end of the post.

Check out these other datasets that could maybe spawn some ideas for personal projects.

3. The Key Differences

Now that we’ve defined both terms, let’s highlight the key differences:

| Feature | Large Language Model (LLM) | Dataset |

|---|---|---|

| Definition | An AI model that generates human-like text | A collection of raw text data |

| Purpose | To process, understand, and generate language | To provide material for training an AI model |

| Functionality | Learns from data and makes predictions based on context | Stores information but does not process or predict |

| Example | GPT-4, BERT, LLaMA | Wikipedia, Common Crawl, BooksCorpus |

| Can it generate text? | Yes, it creates coherent and context-aware responses | No, it only provides existing text samples |

4. The Relationship Between Datasets and LLMs

A dataset is the foundation on which an LLM is built. Without datasets, LLMs wouldn’t have anything to learn from. However, the dataset alone doesn’t provide intelligence—it’s just raw material. It’s the LLM that processes and refines this information to make sense of it and generate new, human-like responses.

Think of it like baking a cake:

- The dataset is the ingredients—flour, sugar, eggs, etc.

- The LLM is the baker who mixes those ingredients together, bakes them, and produces a delicious cake.

5. Why Does This Matter?

Understanding the difference between datasets and LLMs is crucial for anyone interested in AI. It helps clarify how AI models work, why they sometimes get things wrong (garbage in, garbage out), and why ethical considerations around training data are so important.

For example:

- Bias in datasets – If the dataset contains biased information, the LLM will learn and reproduce those biases.

- Data privacy concerns – If an LLM is trained on sensitive or copyrighted content, it can raise legal and ethical questions.

- Data freshness – Since LLMs don’t continuously update themselves, they might not have the latest information unless retrained with new datasets.

6. Final Thoughts

To sum it up:

- A dataset is just raw text—it doesn’t “think.”

- A large language model takes that dataset, learns from it, and becomes a functional AI assistant.

- The quality of an LLM depends heavily on the quality of the dataset it’s trained on.

Hopefully, this clears up the difference between these two essential AI components. If you ever hear someone say, “The AI knows everything,” just remember: the AI is only as good as the data it learned from!

Got questions or thoughts on LLMs and datasets? Drop them in the comments below!